Building an AI Agent: The Right Stack for Product Teams

In just a few years, we’ve gone from prompting static models to building autonomous agents. This guide breaks down the components of an AI agent stack, designed for product teams that want to move from idea to execution.

Matias Emiliano Alvarez Duran

By this point, most digital workers are familiar with tools built on large language models (LLMs), like ChatGPT, Claude, or Perplexity. In fact, 52% of adult Americans already use an LLM to ask questions, create documents, plan schedules, and even code. (1)

While Generative AI tools have proven to be of immense help, they still require a lot of hand-holding, especially when it comes to fine-tuning prompts and editing the output. Also, as AI models are only as powerful as the data they were trained on, their information is limited.

Now, imagine if a tool like Copilot could iterate and make autonomous decisions based on given prompts. This is what AI agents can do.

AI agents look like the most promising route to a hybrid workforce in the near future. They can take on certain responsibilities and act as an additional team member. However, building them isn’t as easy as using an LLM.

If you want to understand, in simple terms, what AI agents are and how to build them, this article is for you. We’ll explain what they are, how they differ from AI models and workflows, and how to build an AI agent stack for product teams.

Table of contents

- AI agents explained

- How to build AI agents using the right AI agent stack

- Why product teams need AI agents

- Challenges of building AI agents

- Build vs. buy: What is best for your type of team?

- The NaNLABS way: Building AI agent stacks that perform

AI agents explained

iRobot’s Roomba robotic vacuum cleaner maps a house as it cleans to better predict its path

AI agents are software systems that can perform certain tasks without human intervention. Many are built on ReAct (short for Reasoning + Acting), a popular pattern in agentic AI in which the agent alternates between reasoning about what to do next and acting on that reasoning, so it can plan and carry out multi-step tasks. (Not to be confused with React, the JavaScript library.)

In simpler words, AI agents try to emulate human workers. They think about potential outcomes of their actions, set processes in place to improve the output or correct their mistakes, and make decisions.

For example, Roomba, iRobot’s robotic vacuum cleaner, is a great representation of a goal-oriented AI agent. Its main goal is to clean the house, but to do it efficiently, it needs to:

- Map out the house (plan)

- Determine when to rotate or take a different route (decide)

- Go back to its charging station (plan and decide)

- Alert the owner to issues (escalate)

All of this happens without human intervention. An AI workflow, on the other hand, would show an error or stop the process if it encountered an issue.
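To make the contrast concrete, here is a minimal Python sketch of the idea. It is not how a real Roomba works: the `VacuumAgent` class, the `fixed_workflow` function, the room names, and the battery model are all hypothetical and only illustrate the plan, decide, and escalate steps listed above next to a workflow that halts on the first problem.

```python
from dataclasses import dataclass, field


@dataclass
class VacuumAgent:
    """Toy goal-oriented agent: clean every room, recharge when low, escalate when stuck."""
    rooms: list[str]
    blocked: set[str] = field(default_factory=set)  # rooms the robot cannot enter
    battery: int = 3                                 # rooms it can clean per charge
    log: list[str] = field(default_factory=list)

    def run(self) -> list[str]:
        todo = list(self.rooms)          # plan: the rooms still to clean
        charge = self.battery
        while todo:
            room = todo.pop(0)
            if room in self.blocked:     # escalate, then carry on with the rest
                self.log.append(f"alert: cannot reach {room}")
                continue
            if charge == 0:              # decide: recharge before continuing
                self.log.append("recharge")
                charge = self.battery
            self.log.append(f"clean {room}")
            charge -= 1
        return self.log


def fixed_workflow(rooms: list[str], blocked: set[str]) -> list[str]:
    """Rule-based workflow: same steps, but it stops at the first problem."""
    log = []
    for room in rooms:
        if room in blocked:
            log.append(f"error: cannot reach {room}")
            break
        log.append(f"clean {room}")
    return log


house = ["kitchen", "hall", "office", "bedroom", "bath"]
print(VacuumAgent(rooms=house, blocked={"office"}, battery=2).run())
print(fixed_workflow(house, blocked={"office"}))
```

In this toy run, the agent reports the blocked office, recharges, and finishes the remaining rooms, while the workflow stops as soon as it hits the office.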

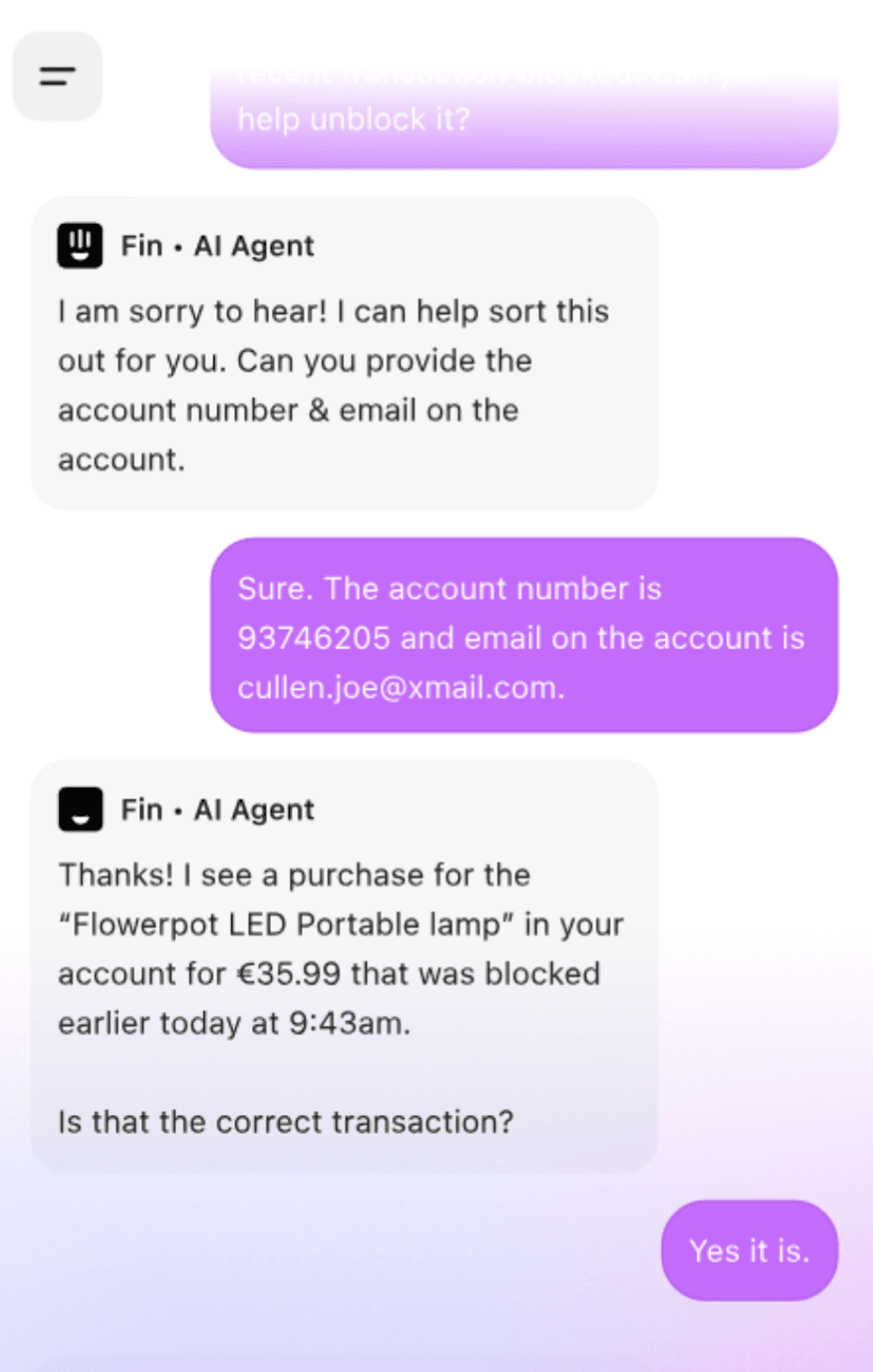

Fin AI Agent can help customers solve issues without human intervention while offering a human-like experience to them.

Another example of this is Fin, Intercom’s AI support agent. Instead of acting as a chatbot and giving customers predetermined answers and options to choose from, Fin can have conversations with them and perform actions on their behalf. For instance, a customer can contact their bank support through the chat (powered by Fin) and ask it to help them unblock a transaction. Fin can confirm the charges with the customer and complete a set of actions to help them achieve the desired outcome as if it were a human agent. This is a great example of using AI in customer experience beyond automated knowledge bases.

The difference between AI models, AI workflows, and AI agents

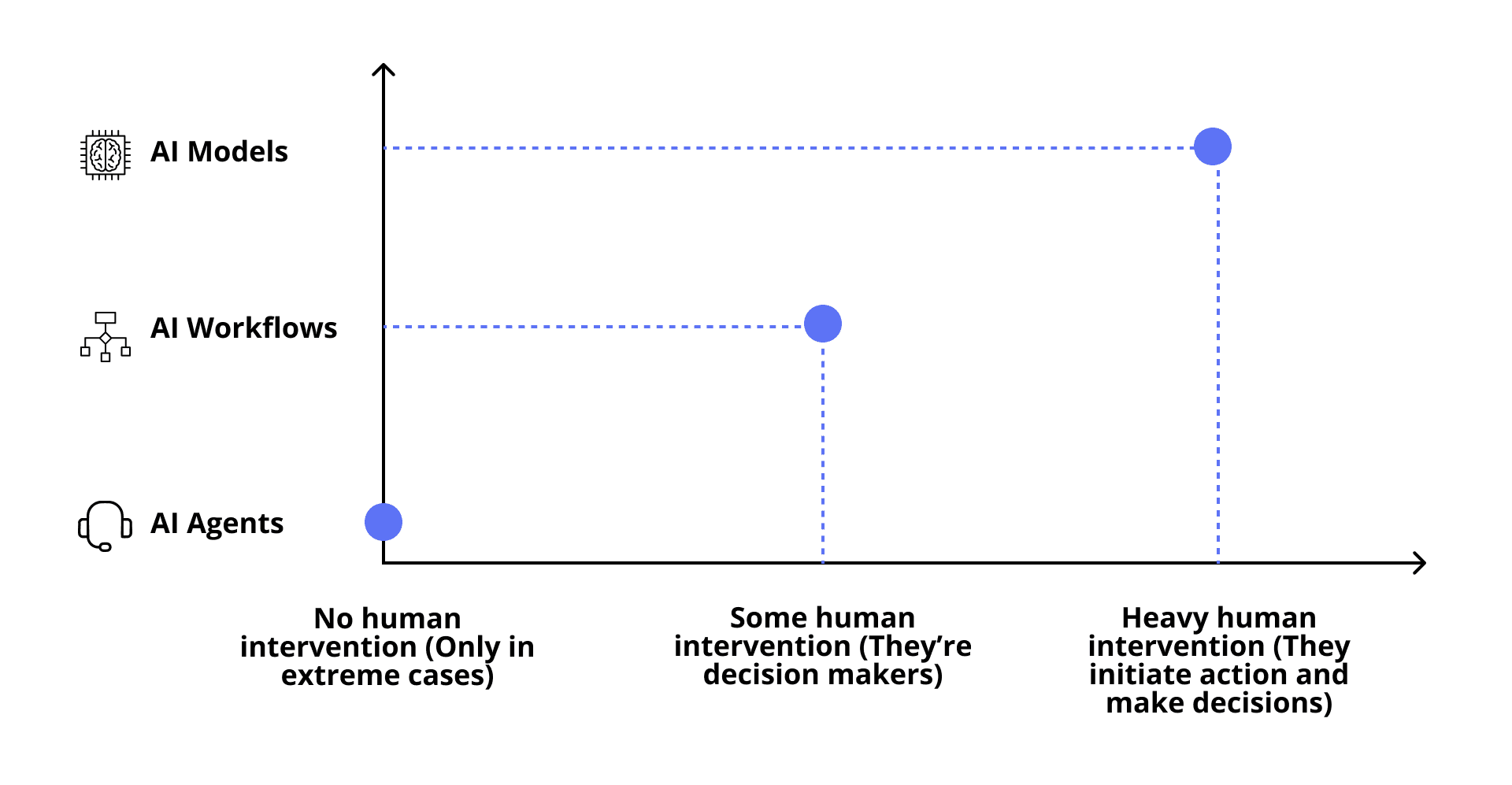

Comparison graph between human intervention and AI systems

AI models, workflows, and agents are three different systems that can help humans complete a set of tasks. The difference lies in how much we actually need to intervene.

AI models are programs trained on data that use algorithms to mimic human intelligence, like Generative AI tools. These can perform actions but require human intervention to initiate the process and make decisions. For example, a gen AI model like ChatGPT can write emails, documents, invoices, code, and apology letters for you. However, it won’t do it without your request or send it for you.

Also, these tools only know what was in their training data. For instance, a tool like Claude can’t tell you how many new subscribers your product got this month unless it’s connected to your customer data via a RAG pipeline or internal API.

AI workflows, on the other hand, expand the range of action and can complete additional tasks by following a set of rules. They automate a predefined sequence of steps and use AI models as part of the process.

For example, say you set up an automated workflow that sends you a Slack notification at the end of each month with a brief overview of new platform subscribers and churn rate. To get that Slack message, you’d need to:

- Share your database with an AI tool

- Integrate it with Slack

- Build a prompt for the system to perform a query on your database, and write a summary

- Set up an automation for this to happen every month

In this case, you don’t need to intervene to make the tasks happen, but you’re the one who makes the final decision.
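The steps above can be sketched as a fixed pipeline. This is only an illustration under stated assumptions: the `subscribers` table and its columns are made up, `summarize` is a plain template standing in for the LLM prompt, and `post_to_slack` appends to a list instead of calling a real Slack integration. The churn formula (churned this month divided by subscribers active at the start of the month) is also a simplification.

```python
import sqlite3


def demo_db() -> sqlite3.Connection:
    """Hypothetical subscriber data: (id, month joined, month churned or NULL)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE subscribers (id INTEGER, joined TEXT, churned TEXT)")
    conn.executemany(
        "INSERT INTO subscribers VALUES (?, ?, ?)",
        [
            (1, "2025-01", None),
            (2, "2025-01", "2025-03"),
            (3, "2025-02", None),
            (4, "2025-02", None),
            (5, "2025-03", None),
            (6, "2025-03", None),
        ],
    )
    return conn


def query_metrics(conn: sqlite3.Connection, month: str) -> dict:
    """Step 1: run fixed queries against the database."""
    new = conn.execute(
        "SELECT COUNT(*) FROM subscribers WHERE joined = ?", (month,)
    ).fetchone()[0]
    churned = conn.execute(
        "SELECT COUNT(*) FROM subscribers WHERE churned = ?", (month,)
    ).fetchone()[0]
    active_at_start = conn.execute(
        "SELECT COUNT(*) FROM subscribers "
        "WHERE joined < ? AND (churned IS NULL OR churned >= ?)",
        (month, month),
    ).fetchone()[0]
    churn_rate = churned / active_at_start if active_at_start else 0.0
    return {"month": month, "new": new, "churn_rate": churn_rate}


def summarize(metrics: dict) -> str:
    """Step 2: stand-in for the LLM call that would write the summary."""
    return (
        f"{metrics['month']}: {metrics['new']} new subscribers, "
        f"churn rate {metrics['churn_rate']:.1%}"
    )


def post_to_slack(message: str, outbox: list[str]) -> None:
    """Step 3: stand-in for the Slack integration (just records the message)."""
    outbox.append(message)


def run_monthly_report(conn: sqlite3.Connection, month: str, outbox: list[str]) -> str:
    """The whole workflow: always the same steps, in the same order."""
    message = summarize(query_metrics(conn, month))
    post_to_slack(message, outbox)
    return message
```

A scheduler (the "every month" automation) would call `run_monthly_report` once per month. Nothing in the pipeline chooses what to do next, which is what makes it a workflow rather than an agent.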

One of the most common types of AI workflow is the retrieval-augmented generation (RAG) pipeline, which lets an LLM retrieve data from other sources before it answers, like in the example above.
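Here is a rough sketch of the retrieval step in a RAG pipeline. It makes a big simplification: the `embed` function just counts words, whereas real pipelines use a learned embedding model and usually a vector database. The function names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the question."""
    q = embed(question)
    return sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_rag_prompt(question: str, documents: list[str]) -> str:
    """Put the retrieved text in front of the question before it goes to the LLM."""
    context = "\n".join(retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


docs = [
    "March report: 120 new subscribers and 4% churn.",
    "Office hours are 9 to 5 on weekdays.",
    "The API rate limit is 100 requests per minute.",
]
print(build_rag_prompt("How many new subscribers did we get in March?", docs))
```

The LLM never sees the whole knowledge base, only the passages that the retrieval step judged relevant to the question.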

AI agents and AI workflows feel similar, but there’s one key difference. AI agents analyze potential scenarios, decide what to do, and act on those decisions, whereas AI workflows only follow a fixed list of instructions.
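A small sketch of a ReAct-style loop shows where that difference lives in code. Everything here is illustrative: `scripted_model` is a hard-coded stand-in for an LLM call, `lookup_subscribers` is a hypothetical tool with made-up numbers, and the `Action: tool[argument]` text format is just one convention we picked for the example.

```python
from typing import Callable

# Hypothetical data the tool can look up.
SUBSCRIBERS = {"2025-02": 95, "2025-03": 120}


def lookup_subscribers(month: str) -> str:
    """Tool: return the subscriber count for a month as text."""
    return str(SUBSCRIBERS.get(month, 0))


def scripted_model(history: list[str]) -> str:
    """Stand-in for an LLM: picks the next step based on what it has observed so far."""
    observed = [int(h.split(": ", 1)[1]) for h in history if h.startswith("Observation")]
    if len(observed) == 0:
        return "Action: lookup_subscribers[2025-03]"
    if len(observed) == 1:
        return "Action: lookup_subscribers[2025-02]"
    return f"Final: {observed[0] - observed[1]} more subscribers than last month"


def run_agent(
    question: str,
    model: Callable[[list[str]], str],
    tools: dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> str:
    """Reason -> act -> observe, repeated until the model gives a final answer."""
    history = [f"Question: {question}"]
    for _ in range(max_steps):
        step = model(history)                      # reason: the model chooses the next step
        history.append(step)
        if step.startswith("Final:"):
            return step.removeprefix("Final:").strip()
        name, _, arg = step.removeprefix("Action:").strip().partition("[")
        tool = tools.get(name)
        result = tool(arg.rstrip("]")) if tool else f"unknown tool {name}"
        history.append(f"Observation: {result}")   # observe: feed the result back in
    return "stopped: step limit reached"


print(run_agent("How did subscribers change?", scripted_model,
                {"lookup_subscribers": lookup_subscribers}))
```

In a workflow, the sequence of tool calls is written by a person ahead of time. In this loop, the model decides each next call from the observations so far, and the `max_steps` limit is a simple guardrail against loops that never finish.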

What an AI agent is not

An AI agent is a compound system built on top of a combination of tools. It’s not a:

- Monolithic system. It’s modular and flexible, allowing you to mix and match components based on your needs.

- Replacement for developers or your entire workforce. It augments developer workflows but still requires human design, oversight, and iteration.

- Passive model. It’s not just a model that responds to prompts. AI agents can take action, reason, and learn over time.

- Static system. AI agents are dynamic and adaptive, evolving through memory and new data, not hardcoded rules.

- Just a language model. An AI agent includes an LLM, but also memory, planning frameworks, orchestration layers, and tooling. It’s the combination that gives it autonomy.

How to build AI agents using the right AI agent stack

An AI agent stack is the combination of tools you need to build an autonomous system, just like you have MERN (MongoDB, Express.js, React, and Node.js) for web development. Before working on AI initiatives, assess whether your data infrastructure is AI-ready. AI models are only as strong as the data behind them. If your current architecture isn’t robust, your AI development may fail.

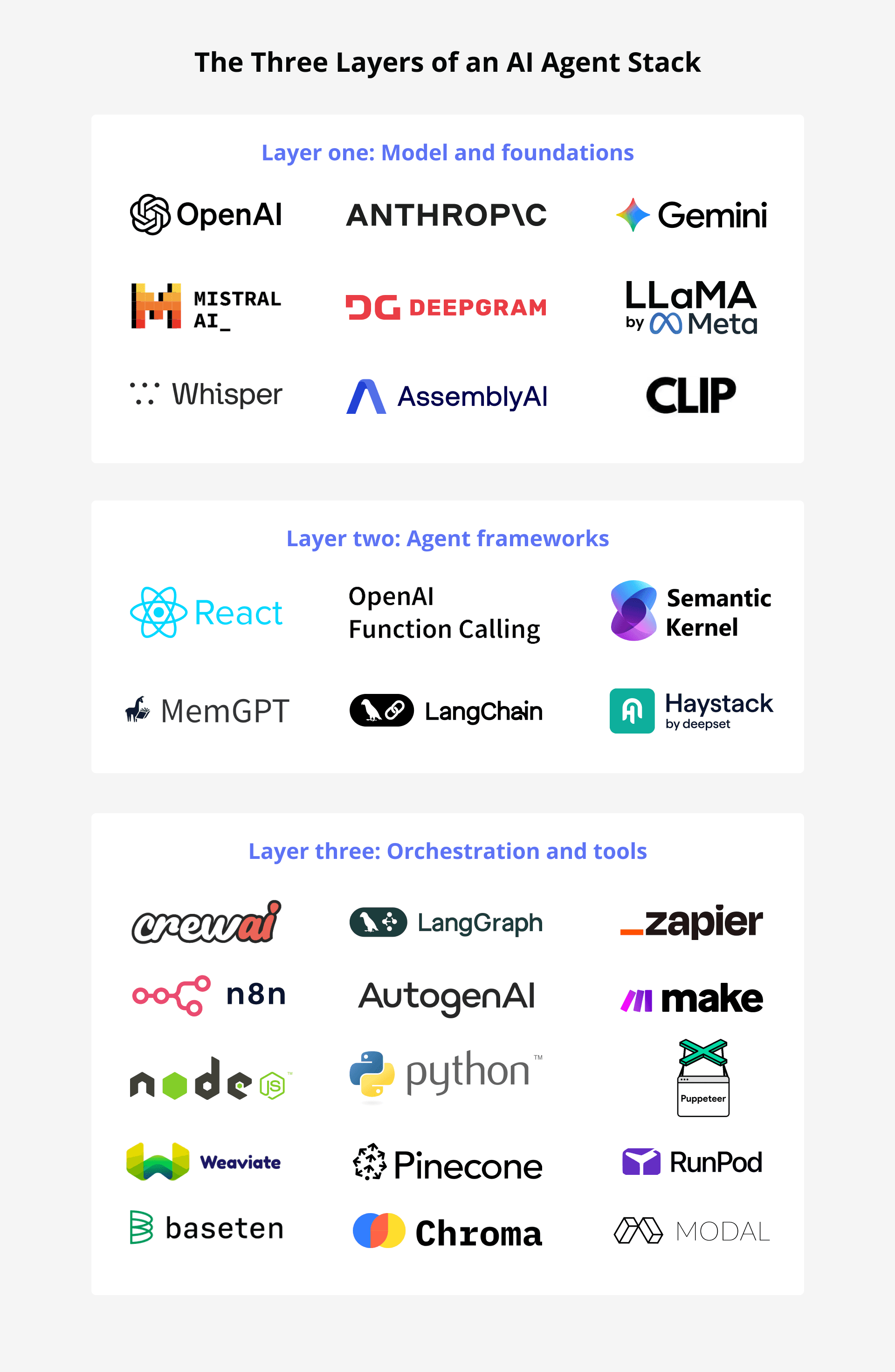

The AI agent stack is usually composed of three layers.

Layer 1: Foundation Models & Input Processing

This is the base layer; it includes the models that power your AI agents. For example, you can use a multimodal LLM like GPT-4o as your foundation for text, image, and audio inputs. These kinds of models are already trained on general tasks and are capable of understanding different forms of input.

You can also connect these LLMs to your databases or external applications to perform more specific tasks using unstructured data sources.

Tools you can include in this layer of your stack:

- OpenAI (GPT-4o) for natural language understanding and multimodal reasoning

- Anthropic (Claude), another LLM option optimized for safety and reasoning

- Google Gemini, multimodal foundation model with tight integration with Google tools

- Meta’s Llama, an open-weight model family that is useful for self-hosted setups

- Mistral, efficient open-weight models for inference at scale

- Whisper/AssemblyAI/Deepgram for speech-to-text and audio processing

- CLIP/Gemini Vision for image and video inputs

Layer 2: Reasoning, Planning & Memory

Ok, so let’s say you’re using Gemini Vision as your foundation model. Then, you need to apply frameworks to it so it can prepare, reason, and act using memory efficiently.

The second layer should have components that teach and help AI agents determine tasks, set goals, design strategies, and come up with a plan. This layer is key because it differentiates AI agents from workflows and prepares them to ReAct.

Here, you should also choose the right memory systems so the LLM can save all shared information and improve agent performance over time. With time, AI agents will learn your preferences, adjust based on previous corrections, and identify patterns.

Examples of frameworks you can use in this layer:

- ReAct/OpenAI Function Calling/Toolformer for enabling tool use and reasoning

- LangChain connects LLMs with memory, tools, and reasoning logic

- Semantic Kernel is Microsoft’s open-source SDK for building agents with memory and planning

- Haystack for integrating retrieval-augmented generation (RAG) pipelines

- LlamaIndex for building context-aware agents connected to external data

- MemGPT for persistent memory and E2B for sandboxed code execution and fine-grained task control

Layer 3: Orchestration, Tool Use & Integration

This layer works as a project manager and Zapier at the same time. It connects your AI agent to real-world systems and ensures it can take action reliably. It involves tool use, API calls, plugin execution, and task management. Orchestration is about giving your agent the “hands and feet” to make decisions, run tasks, and integrate with other systems and platforms.

We say this layer is like a PM because it also guarantees that one agent doesn’t interfere with another agent’s tasks in an AI team. A strong orchestration layer ensures that the AI agent works autonomously while staying within context and able to retrieve or interact with up-to-date information.
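The "project manager" role can be sketched in a few lines. This is a toy, not how LangGraph, AutoGen, or CrewAI work internally: the `Orchestrator` class, the role names, and the lambda "agents" are all hypothetical. It only shows the idea that each task has exactly one owner, so agents don’t step on each other.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Orchestrator:
    """Assigns each task to exactly one agent role and collects the results."""
    agents: dict[str, Callable[[str], str]]               # role -> agent function
    owners: dict[str, str] = field(default_factory=dict)  # task -> role that owns it
    results: dict[str, str] = field(default_factory=dict)

    def assign(self, task: str, role: str) -> bool:
        """Claim a task for a role; refuse if it is already owned or the role is unknown."""
        if task in self.owners or role not in self.agents:
            return False
        self.owners[task] = role
        return True

    def run(self) -> dict[str, str]:
        """Run every assigned task once, using the agent that owns it."""
        for task, role in self.owners.items():
            if task not in self.results:
                self.results[task] = self.agents[role](task)
        return self.results


team = Orchestrator(agents={
    "researcher": lambda task: f"notes on {task}",
    "writer": lambda task: f"draft of {task}",
})
team.assign("summarize feedback", "researcher")
team.assign("summarize feedback", "writer")   # refused: the task already has an owner
team.assign("write changelog", "writer")
print(team.run())
```

Real orchestration frameworks add state, retries, and communication between agents on top of this basic ownership idea.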

Examples of tools in this layer include:

Agent Orchestration:

- LangGraph for multi-step, multi-agent orchestration with state control

- AutoGen (Microsoft) for building multi-agent systems in which agents collaborate with each other

- CrewAI for orchestrating AI "teams" with defined roles and responsibilities

Execution & Tooling:

- Python/Node.js environments for custom tool execution and scripting

- Browser tools (AutoGPT browser plugin, Puppeteer) for autonomous web browsing

Integration & Retrieval:

- Zapier/Make.com/n8n for connecting agents with real-world apps via APIs

- Vector DBs (Pinecone, Weaviate, Chroma) for embedding storage and retrieval

- Task-aware backends (Modal, Baseten, RunPod) to handle heavy compute tasks or fine-tuned model execution

Three layers of an AI agent stack

Why product teams need AI agents

Product teams today face intense pressure to move fast, deliver personalized experiences, and stay ahead of the competition. But you can’t always wait for developers to give you access to data or bring product improvements to life, as they’re usually stretched thin.

AI agents can help by accelerating product development and decision-making in certain spaces. For example, an AI agent could analyze user feedback at scale, generate product copy or specs, simulate user behavior for testing, and even automate repetitive QA tasks.

Delegating this work to an automated agent allows product managers, designers, and engineers to focus on high-impact, strategic work, while AI handles the heavy lifting in the background.

With the right processes, training, and guardrails, AI agents can reduce time-to-market, uncover user insights faster, and help teams build better products more efficiently.

Challenges of building AI agents

AI agents promise to handle tedious manual tasks with more precision and at a faster speed. However, they come with certain challenges, including:

- Context retention and memory limitations. Many LLMs have a limited context window, meaning they can forget earlier parts of a conversation or task and can make the same mistakes twice. Building effective memory systems that retain relevant information over time is still a work in progress for agentic AI.

- Tool integration complexity. Connecting AI agents to third-party tools, APIs, and databases often requires custom development and thoughtful orchestration. For product managers without hands-on programming experience, this can become a blocker.

- Reasoning and hallucinations. Agents sometimes make incorrect assumptions or generate false information. This can lead to costly mistakes if left unsupervised.

- Debugging and visibility. When agents act autonomously, it can be hard to trace their decision-making process. Without proper logging and observability tools, debugging an agent’s behavior can feel like solving a black-box puzzle.

- Security and ethical concerns. Giving agents access to sensitive data or the ability to act (e.g., send emails, make purchases) adds risk. You need strong permissioning, monitoring, and ethical guidelines to prevent misuse or unintended actions that could harm your business.
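Two of the challenges above, visibility and permissioning, can be addressed with a thin wrapper around the agent’s tools. The sketch below is one possible shape under our own assumptions: `GuardedTools`, the tool names, and the status strings are hypothetical. Every call is checked against an allowlist, risky actions wait for human approval, and each attempt is written to an audit log.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class GuardedTools:
    """Wraps tool calls with an allowlist, an approval gate, and an audit log."""
    tools: dict[str, Callable[[str], str]]
    allowed: set[str]                                        # tools the agent may use
    needs_approval: set[str] = field(default_factory=set)   # tools that need a human OK
    audit_log: list[dict] = field(default_factory=list)

    def call(self, agent: str, tool: str, arg: str,
             approved: bool = False) -> tuple[str, Optional[str]]:
        if tool not in self.allowed or tool not in self.tools:
            status, result = "denied", None
        elif tool in self.needs_approval and not approved:
            status, result = "pending_approval", None
        else:
            status, result = "ok", self.tools[tool](arg)
        # Record every attempt, including refused ones, so behavior can be traced later.
        self.audit_log.append({"agent": agent, "tool": tool, "arg": arg, "status": status})
        return status, result


guard = GuardedTools(
    tools={
        "read_report": lambda month: f"report for {month}",
        "send_email": lambda to: f"sent to {to}",
        "delete_database": lambda name: f"deleted {name}",
    },
    allowed={"read_report", "send_email"},
    needs_approval={"send_email"},
)
print(guard.call("support-agent", "read_report", "March"))
print(guard.call("support-agent", "send_email", "customer@example.com"))
print(guard.call("support-agent", "delete_database", "prod"))
```

The audit log doubles as a debugging aid: when an agent does something unexpected, you can replay which tools it tried, with which arguments, and what was refused.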

Build vs. buy: What is best for your type of team?

Choosing whether to build or buy your AI agent stack depends on your team’s goals, resources, and technical maturity.

You might want to build AI agents if you’re tech-savvy or have a senior development team with AI and machine learning expertise and the bandwidth to take it on. You can build your own LLM to guarantee your security guidelines are met, or build on top of open-source libraries and tools. Building an AI agent from scratch is worth it if your product involves proprietary data or complex internal logic, and you can afford a longer timeline to get it right.

Another option is to use out-of-the-box solutions like Agentforce or Zapier Agents to create and deploy AI teammates. This is a good solution if you’re growing quickly, don’t have in-house ML engineers, or need to scale fast without derailing your core roadmap. However, when you choose this option, you’re tied to the provider, which can become costly and hard to scale.

Lastly, you can work with expert cloud-native data engineers like NaNLABS to prototype an AI agent for you in four to six weeks. Get an AI agent MVP to test, gather fast feedback from real users, and prove value early, without committing your full team or budget.

The NaNLABS way: Building AI agent stacks that perform

Building AI agents early will likely give you a competitive edge. In a few years, when everyone is catching up, you’ll already have developed, trained, tested, and iterated to perfect yours.

At NaNLABS, we’ve helped clients prototype agents to complete various tasks in four to six weeks and test them in real life. Here’s what our client data shows us about the impact of using AI agents in teams like yours:

- 30%–50% downtime reduction by using AI agents to take preemptive actions

- Faster response to shifting business rules or compliance logic

- Empowered teams that trust the system instead of fighting it

- AI that feels native, not disruptive, because we build it around your real-world workflows

At NaNLABS, we specialize in designing custom AI agent stacks that integrate seamlessly with your workflows and scale as your business grows. Our approach combines lean prototyping, strategic architecture, and deep LLM expertise. This results in AI agents that perform autonomously and successfully complete those manual tasks that your team used to spend hours doing.

Want to see what an AI agent could do for your product? We’ll help you prototype one in 4–6 weeks.

Sources

1. Survey: 52% of U.S. adults now use AI large language models like ChatGPT. (2025) Found on: https://www.elon.edu/u/news/2025/03/12/survey-52-of-u-s-adults-now-use-ai-large-language-models-like-chatgpt/